Methods

© Laurent Arthur

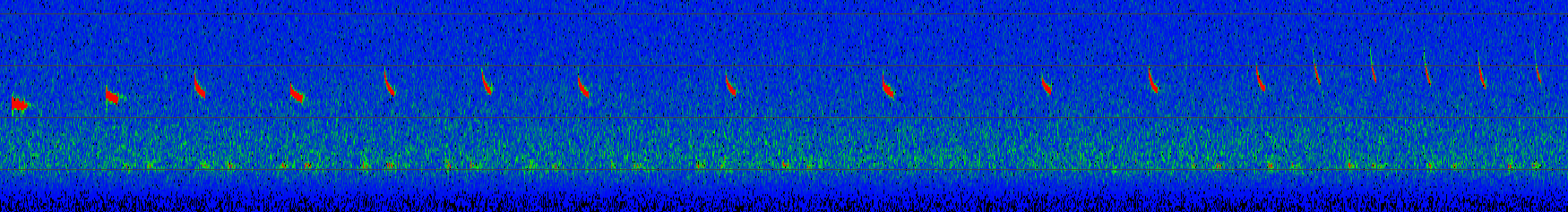

Recordings of bat echolocation calls are easy to collect

Acoustic recordings provide quality data to model spatial distribution


For this project, acoustic recordings from anywhere in geographical Europe are welcome. It is important to obtain recordings from areas with no activity of the target species, as well as from areas with medium and high activity of these species.

Data

Metadata required

Data filtering


- This project offers the opportunity to review the most commonly used recorders and recording settings in Europe.


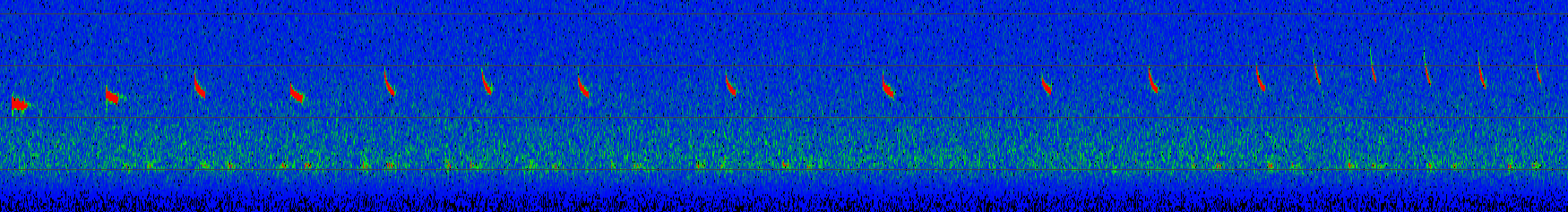

Human identification of bat echolocation calls can be of very high quality, but it also introduces observer bias (i.e. two observers might not agree on the identity of the same sound sequence). The number of recordings and observers in this project makes it impossible to control for observer bias, which is why we chose to use automatic ID. Although not perfect, automatic ID makes it possible to assess the error rate, which can be used to filter the data (e.g. all sound sequences with an error probability greater than 0.1 will be discarded) or to weight the data (e.g. sound sequences with a higher error probability will be assigned a lower weight). Moreover, automatic ID allows the whole dataset to be re-analysed reproducibly in the future, using this process:
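
Separately from the project's own pipeline, the two uses of the error probability described above (filtering and weighting) can be illustrated with a minimal Python sketch. The `Sequence` record, the species labels, the example values and the weight formula `1 - error_prob` are all assumptions made for illustration; only the 0.1 cut-off comes from the example in the text.

```python
from dataclasses import dataclass


@dataclass
class Sequence:
    species: str       # label returned by the automatic ID (hypothetical values below)
    error_prob: float  # estimated probability that this label is wrong


def filter_sequences(sequences, max_error=0.1):
    """Keep only sequences whose error probability does not exceed max_error."""
    return [s for s in sequences if s.error_prob <= max_error]


def weight_sequences(sequences):
    """Pair each sequence with a weight that decreases as error probability rises.

    The weight 1 - error_prob is an illustrative assumption, not the project's formula.
    """
    return [(s, 1.0 - s.error_prob) for s in sequences]


# Made-up records, for illustration only.
records = [
    Sequence("Species A", 0.02),
    Sequence("Species A", 0.30),
    Sequence("Species B", 0.08),
]

kept = filter_sequences(records)      # drops the sequence with error_prob 0.30
weighted = weight_sequences(records)  # keeps everything, but down-weights it
```

Filtering discards uncertain sequences outright, whereas weighting keeps them and lets a model give them less influence; which option is preferable depends on the analysis.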


© Cyril Schönbächler

Species distribution will be predicted with random forest models. Connectivity between hotspots of activity will be inferred using randomized shortest paths.

In this project, a very large dataset of acoustic recordings will be analysed. This context makes it possible to work with some noise in the data:

Variability introduced into the data by the measurement method or the analysis is called noise.

Noise is not always a problem: according to the law of large numbers, random noise averages out as the quantity of data collected increases.
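
A small simulation can make this concrete. The sketch below assumes a hypothetical true activity level of 10 and Gaussian measurement noise with a standard deviation of 5 (both values are invented for illustration), and compares the error of the sample mean for increasing sample sizes.

```python
import random
import statistics


def mean_of_noisy_measurements(true_value, noise_sd, n, rng):
    """Average n measurements of true_value, each disturbed by independent Gaussian noise."""
    return statistics.fmean(true_value + rng.gauss(0.0, noise_sd) for _ in range(n))


rng = random.Random(42)  # fixed seed so the run is repeatable
true_activity = 10.0     # hypothetical value
noise_sd = 5.0           # hypothetical value

# Absolute error of the estimated mean for several sample sizes.
errors = {
    n: abs(mean_of_noisy_measurements(true_activity, noise_sd, n, rng) - true_activity)
    for n in (10, 1_000, 100_000)
}

for n, err in errors.items():
    print(f"n = {n:>7}: error of the mean = {err:.4f}")
```

The expected error shrinks in proportion to the inverse square root of the sample size, so a large dataset can tolerate substantial noise provided that the noise is independent of the quantity being studied.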

Bias appears in results when the noise is correlated with the studied variables.

However, if the correlation is small enough, the resulting bias is negligible. If the correlation can be measured and is not too strong, the bias can be corrected through modelling.
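
The difference between noise and bias can be sketched with a simulation. In this hypothetical scenario (all names and numbers are assumptions), two habitats have identical true activity, but measurements in one habitat carry a systematic offset, so the noise is correlated with the studied variable. If the offset is known, subtracting it removes the bias.

```python
import random
import statistics


def apparent_effect(offset, n, rng):
    """Difference in mean measured activity between two habitats with identical true activity.

    `offset` is a systematic measurement error that affects only the second habitat.
    """
    true_activity = 10.0  # same in both habitats (hypothetical value)
    open_habitat = [true_activity + rng.gauss(0.0, 2.0) for _ in range(n)]
    forest = [true_activity + rng.gauss(0.0, 2.0) + offset for _ in range(n)]
    return statistics.fmean(forest) - statistics.fmean(open_habitat)


rng = random.Random(0)
n = 50_000

unbiased = apparent_effect(0.0, n, rng)  # noise independent of habitat: effect close to 0
biased = apparent_effect(-3.0, n, rng)   # noise correlated with habitat: spurious effect
corrected = biased - (-3.0)              # known offset subtracted: effect close to 0 again

print(f"unbiased: {unbiased:.3f}, biased: {biased:.3f}, corrected: {corrected:.3f}")
```

More data does not help in the biased case: the spurious difference stays close to the offset however large `n` becomes, which is why such a correlation has to be measured and modelled rather than averaged away.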

→ With large datasets, it is important to repeat the analysis with different error-probability thresholds in the automatic ID, to assess the robustness of the results (Barré et al. 2019).
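
As a minimal sketch of such a robustness check, the code below recomputes a simple summary (number of retained sequences per site) under several maximum error probabilities. The site names, error probabilities and threshold values are invented for illustration, and a real analysis would refit the full model at each threshold rather than count sequences.

```python
def activity_by_threshold(sequences, thresholds):
    """Count retained sequences per site for each maximum error probability."""
    results = {}
    for threshold in thresholds:
        counts = {}
        for site, error_prob in sequences:
            if error_prob <= threshold:
                counts[site] = counts.get(site, 0) + 1
        results[threshold] = counts
    return results


# Made-up (site, error probability) pairs, for illustration only.
sequences = [
    ("site_1", 0.01), ("site_1", 0.04), ("site_1", 0.35),
    ("site_2", 0.08), ("site_2", 0.45), ("site_2", 0.48),
]

results = activity_by_threshold(sequences, thresholds=(0.05, 0.1, 0.5))
for threshold, counts in results.items():
    print(threshold, counts)
```

If the conclusions (here, which site appears more active) stay the same across thresholds, they can be considered robust to identification errors; if they change, the choice of threshold deserves closer scrutiny.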

Collecting the data will require effort from partners and from the coordination team. These data are complex (multiple metadata fields to take into account), heavy (especially the sound files) and numerous. This is why a Data Management Plan was written, to ensure that data management follows the most up-to-date practices and international recommendations. It will also guarantee that the data are handled in a predictable and secure way.